Hiring often feels like navigating a maze without a map. Each turn is guided by gut feeling, leading down paths of inconsistency and, too often, regrettable hires. The core problem isn't a lack of good candidates; it's the absence of a compass to evaluate them objectively.

This is where structured rubrics for interviews provide a clear path forward.

An interview rubric is a standardized scoring guide that ensures every candidate is measured against the same essential criteria, transforming subjective impressions into objective data. This isn't just theory. A landmark meta-analysis found structured interviews are over three times more predictive of job performance than unstructured ones.

Unlike generic recruiting posts, this guide goes beyond theory: it includes a real PeopleGPT workflow to help you find talent that matches your newly defined criteria.

Why Do Traditional Interview Methods Fail Modern Recruiters?

We've all been there: the interview debrief devolves into a debate over vague impressions like "great energy" versus a fuzzy "culture fit." This subjectivity is the root of most hiring mistakes, creating a frustrating cycle that leaves teams misaligned. A well-designed rubric promises a shift to an equitable process where decisions are backed by evidence, not just intuition. The solution is not about creating complex spreadsheets; it’s about establishing a simple, shared language for what excellence actually looks like for a specific role.

Defining Your Path Forward

A hiring rubric is what turns vague job requirements into concrete sourcing criteria you can act on. It forces alignment between you and the hiring manager, defining what is truly essential before you even start sourcing. That alignment is everything.

A simple way to start is by breaking down the role's requirements into three key areas:

- Must-haves: These are the absolute non-negotiables. Think specific skills, certifications, or experience levels required to even do the job.

- Nice-to-haves: These are the preferred qualifications that add real value but aren't deal-breakers. A candidate can succeed without them, but they'd be even better with them.

- Bonus Points: Think of these as the extra indicators of a top-tier candidate. Maybe it’s experience in a super-specific niche or a track record of high performance that makes them stand out.
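The three tiers above can be captured in a small data structure so that sourcing and screening check the same list. This is only a minimal sketch: the `RoleRequirements` class, the `screen` function, and the example skills are hypothetical names chosen for illustration, not part of any particular tool.

```python
from dataclasses import dataclass, field


@dataclass
class RoleRequirements:
    """Three-tier requirements for one role (example values are hypothetical)."""
    must_haves: set = field(default_factory=set)
    nice_to_haves: set = field(default_factory=set)
    bonus_points: set = field(default_factory=set)


def screen(candidate_skills, role):
    """Check the non-negotiables first, then count matches in the optional tiers."""
    missing = role.must_haves - candidate_skills
    return {
        "qualified": not missing,
        "missing_must_haves": sorted(missing),
        "nice_to_haves_met": len(role.nice_to_haves & candidate_skills),
        "bonus_points_met": len(role.bonus_points & candidate_skills),
    }


role = RoleRequirements(
    must_haves={"python", "sql"},
    nice_to_haves={"airflow", "dbt"},
    bonus_points={"open-source maintainer"},
)
result = screen({"python", "sql", "dbt"}, role)
print(result)
```

Keeping must-haves as a hard gate and the other two tiers as simple counts mirrors the idea that a candidate can succeed without the optional items but not without the essentials.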

Beyond the Job Description

You might be thinking, "Doesn't a good job description cover all this?" Not really. Most JDs are written for candidates, not for your internal evaluation team. They list responsibilities but don’t help you weigh one candidate’s strengths against another's.

A rubric becomes your internal compass, keeping your outreach and screening focused. It's also a powerful tool for fairness. By standardizing how you evaluate every single applicant, you actively minimize the influence of unconscious biases that cause great candidates to be overlooked. Understanding and mitigating these biases is crucial for building a more equitable process and avoiding issues related to adverse impact in hiring.

How Do You Build an Effective Interview Rubric?

Building a genuinely effective interview rubric isn't like filling out a template; it's more like architectural design. You're laying the foundation for every hiring decision. A good rubric is the compass that guides every interviewer to the same destination, ensuring the entire structure is sound.

I get it. You might be thinking, "This sounds like a lot of administrative overhead for a process that already feels rushed."

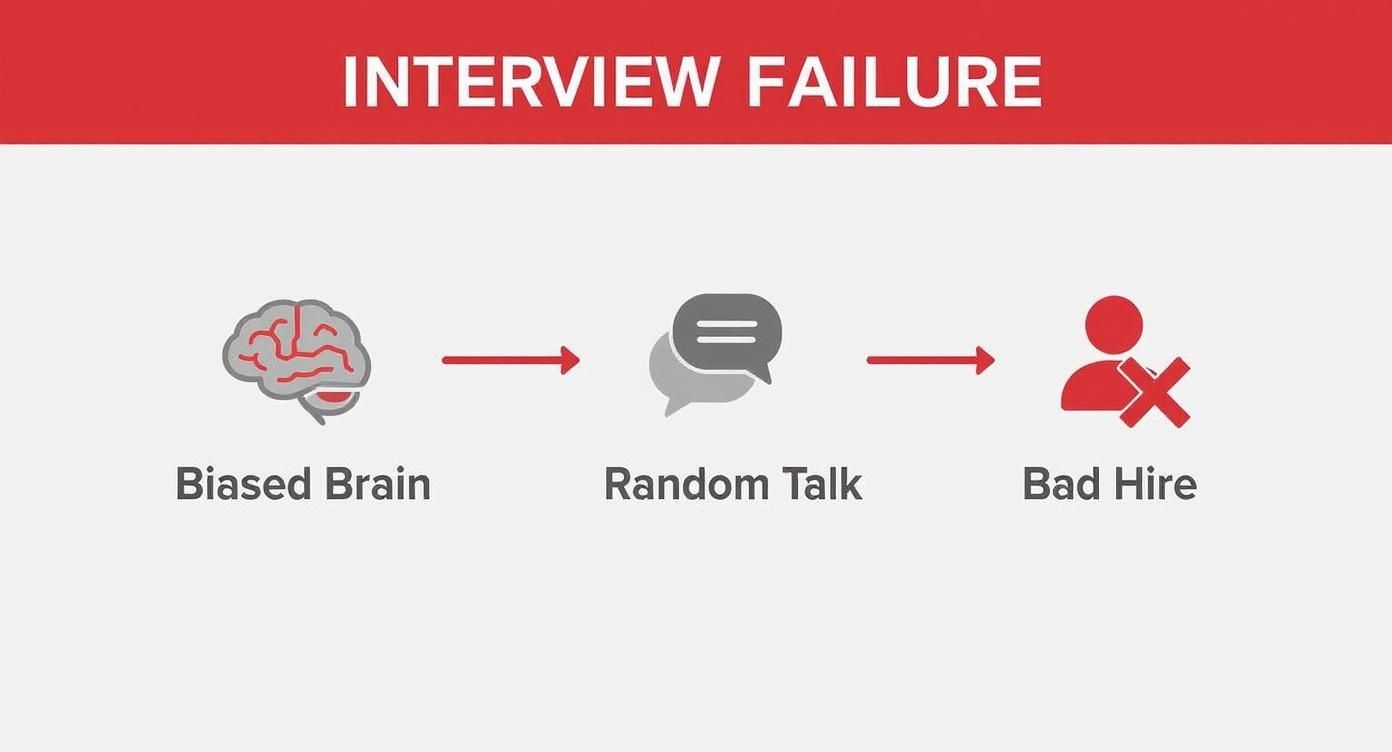

But here’s the thing: a well-designed rubric actually saves time. It dramatically shortens those messy, subjective debriefs, slashes the risk of a costly mis-hire, and brings clarity to the entire process. Without this structure, hiring decisions often fall into a predictable pattern of failure. It starts with individual bias, leads to unstructured conversations, and ultimately ends with a bad hire.

Each step in that chain is easy to slip into when there is no standardized framework to guide the evaluation.

Deconstruct the Role Into Core Competencies

The absolute cornerstone of any great rubric is breaking down the job description into a focused set of core competencies. This isn’t about copying and pasting bullet points from the JD. It’s about translating vague responsibilities into measurable, observable behaviors you can actually score.

Get in a room with the hiring manager and define what success truly looks like for this role. I find it helps to categorize competencies into three distinct buckets:

- Technical Skills: These are the hard, teachable abilities needed to do the job. Think proficiency in Python, experience with HubSpot, or knowing your way around Google Analytics.

- Behavioral Traits: These are the softer, more innate qualities that define how someone works. This includes things like problem-solving, collaboration, adaptability, and their communication style under pressure.

- Cultural Alignment: This is the most nuanced category and often the most misunderstood. It’s not about finding someone you’d want to grab a beer with. It’s about identifying values and work styles that click with your team’s operating principles, like a preference for autonomous work or a high degree of accountability.

This process is a key part of a much bigger talent strategy. To see how it fits into the modern hiring landscape, see our guide on skills-based hiring.

Create a Clear Scoring Scale with Behavioral Anchors

Once you have your competencies, the next move is to build a scoring scale that kills ambiguity. A simple 1-to-5 scale is common, but its real power comes from the behavioral anchors you attach to each score.

These anchors are specific, observable examples of what each rating looks like in action. Without them, a "4" means something completely different to every interviewer. With them, everyone is reading from the same map.

For a "Communication" competency, your anchors might look something like this:

- 1 (Needs Improvement): Answers are unclear, disorganized, or fail to directly address the question. The interviewer has to constantly re-ask or probe for clarity.

- 3 (Meets Expectations): Provides clear, logical answers. Listens actively and communicates their thoughts effectively with the interviewer.

- 5 (Exceeds Expectations): Articulates complex ideas with exceptional clarity and confidence. Proactively structures answers, anticipates follow-up questions, and elevates the conversation.

These anchors are your rubric's secret weapon. They force interviewers to ground their feedback in tangible evidence ("The candidate did X") rather than vague feelings ("I just got a good vibe"). That shift is what makes your hiring process defensible, fair, and far more accurate.
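One way to make the "evidence, not vibes" rule hard to skip is to store the anchors alongside the scale and reject any score submitted without a cited observation. The sketch below assumes a plain dictionary of anchors (wording condensed from the Communication example) and a hypothetical `record_score` helper. A real ATS scorecard would expose its own fields.

```python
# Anchor wording condensed from the Communication example; the structure is hypothetical.
COMMUNICATION_ANCHORS = {
    1: "Needs Improvement: unclear or disorganized answers; interviewer must keep probing.",
    3: "Meets Expectations: clear, logical answers; listens actively.",
    5: "Exceeds Expectations: exceptional clarity; anticipates follow-up questions.",
}


def record_score(competency, score, evidence, anchors):
    """Build one feedback entry, refusing scores that cite no evidence."""
    if score not in range(1, 6):
        raise ValueError("score must be a whole number from 1 to 5")
    if not evidence.strip():
        raise ValueError("cite what the candidate actually said or did")
    # Scores 2 and 4 have no anchor of their own here, so show the
    # nearest anchor at or below the score as a reference point.
    reference = max(level for level in anchors if level <= score)
    return {
        "competency": competency,
        "score": score,
        "evidence": evidence.strip(),
        "reference_anchor": anchors[reference],
    }


entry = record_score(
    "Communication",
    4,
    "Outlined the answer in three parts before going into detail.",
    COMMUNICATION_ANCHORS,
)
print(entry["reference_anchor"])
```

Requiring the `evidence` field is the design choice that matters here: it pushes each interviewer to write "the candidate did X" before a number is accepted.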

Establish Weights for Each Competency

Let's be honest, not all competencies are created equal. For a senior engineering role, "System Design" is way more critical than "Public Speaking." By assigning weights to each competency, you ensure the team prioritizes what matters most for success in the role.

This simple step prevents a candidate who is exceptional in a low-priority area from overshadowing someone who is strong in the essential, must-have skills. It keeps the evaluation laser-focused on what the job actually demands.

A basic weighting system could be as simple as:

- Critical Competencies: 3x Weight

- Important Competencies: 2x Weight

- Supporting Competencies: 1x Weight

This exercise forces a critical conversation with the hiring team about what is truly non-negotiable versus what is simply nice-to-have. You get immense clarity before the first interview even begins, and the result is a system that guides your team to a smarter, more aligned decision.
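To make the effect of weighting concrete, here is a small worked example using the 3x/2x/1x scheme above. The competency names, tier assignments, and candidate scores are made up for illustration, and `weighted_score` is a hypothetical helper that computes a weighted average on the same 1-to-5 scale.

```python
WEIGHTS = {"critical": 3, "important": 2, "supporting": 1}


def weighted_score(scores, tiers):
    """Weighted average of 1-5 scores, using each competency's tier weight."""
    total_weight = sum(WEIGHTS[tiers[name]] for name in scores)
    weighted_sum = sum(score * WEIGHTS[tiers[name]] for name, score in scores.items())
    return weighted_sum / total_weight


# Hypothetical tier assignments for a senior engineering role.
tiers = {
    "System Design": "critical",
    "Collaboration": "important",
    "Public Speaking": "supporting",
}

# Both candidates have the same unweighted average (10 / 3).
candidate_a = {"System Design": 5, "Collaboration": 3, "Public Speaking": 2}
candidate_b = {"System Design": 2, "Collaboration": 3, "Public Speaking": 5}

score_a = weighted_score(candidate_a, tiers)
score_b = weighted_score(candidate_b, tiers)
print(round(score_a, 2), round(score_b, 2))  # 3.83 2.83
```

Both candidates look identical on a simple average, but the weighting separates them: the one who is strong in the critical competency comes out a full point ahead.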

To see how these pieces come together, let's look at how a structured rubric transforms the evaluation process. The table below contrasts the old-school, gut-feel approach with the clarity a rubric provides.

Interview Rubric Component Breakdown

| Component | Traditional Approach (Vague) | Rubric Approach (Specific & Actionable) |
| --- | --- | --- |
| Evaluation Criteria | Based on resume and general "fit." Interviewers ask their own preferred questions. | Based on pre-defined, role-specific competencies like "Problem-Solving" or "Technical Proficiency." |
| Scoring Method | Subjective gut feelings. "Felt like a strong candidate." | A defined scale (1-5) with clear behavioral anchors for what each score means in practice. |
| Feedback Collection | Notes are unstructured, inconsistent, and often focused on personality traits. | Feedback is captured directly against each competency, citing specific examples from the interview. |
| Decision-Making | Relies on the most confident or senior person in the room to sway the decision. | Decisions are data-informed, based on weighted competency scores and objective evidence. |

Methodology: This table contrasts common unstructured interview practices against the best practices for implementing structured interview rubrics as outlined by industrial-organizational psychology research.

How Do You Ensure Fair and Consistent Rubric Implementation?

A perfectly designed rubric is just a blueprint. And a blueprint alone can't build a house—it's useless if the construction crew, your interview panel, doesn't apply it consistently. This is where most rubric initiatives stumble, not in the design but in the real-world execution.

Turning your well-crafted guide into a reliable system is the most critical part of the process. It all hinges on getting everyone to speak the same objective language for evaluating talent, moving past individual interpretations.

Forging Alignment with Interviewer Calibration

The first and most important step here is interviewer calibration. Think of this not as a quick training session but as a hands-on workshop to forge a shared understanding of what each score on your rubric actually means in practice.

Without this, one person's "Exceeds Expectations" is another's "Meets Expectations," which completely undermines the system. The goal is to build inter-rater reliability, making sure that multiple interviewers will land on similar conclusions when they see the same evidence.

Here’s a simple format for a practical calibration workshop:

- Present a Sample Response: Use a recorded mock interview or a written answer to a key interview question.

- Score Independently: Have each interviewer use the rubric to score the sample on their own. No discussion yet.

- Reveal and Discuss: Go around the room and have everyone share their scores for a specific competency.

- Debate the Discrepancies: This is where the magic happens. Dig into why one person scored a 4 while another scored a 2. Force interviewers to point to specific behavioral evidence from the sample to back up their rating.

This process can feel a bit awkward at first, but it quickly gets the team aligned on what separates a "5" from a "3." It solidifies those behavioral anchors and shifts the evaluation from gut feeling to fact-based assessment.
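The "reveal and discuss" step is easier to run if the independent scores are tabulated first, so the group can go straight to the biggest disagreements. The following is a rough sketch under simple assumptions: the rater names and scores are invented, and the rule "flag any competency where the highest and lowest score differ by more than one point" is just one possible threshold, not a standard.

```python
from statistics import mean


def calibration_report(scores_by_rater, max_spread=1):
    """Summarize independent scores and flag competencies worth debating.

    scores_by_rater maps rater name -> {competency: score}.
    A competency is flagged when max score - min score exceeds max_spread.
    """
    competencies = set()
    for scores in scores_by_rater.values():
        competencies.update(scores)

    report = {}
    for name in sorted(competencies):
        values = [s[name] for s in scores_by_rater.values() if name in s]
        spread = max(values) - min(values)
        report[name] = {
            "mean": round(mean(values), 2),
            "spread": spread,
            "discuss": spread > max_spread,
        }
    return report


# Hypothetical scores for one recorded mock interview.
sample = {
    "Ana": {"Communication": 4, "Problem-Solving": 3},
    "Ben": {"Communication": 2, "Problem-Solving": 3},
    "Chi": {"Communication": 3, "Problem-Solving": 4},
}
report = calibration_report(sample)
for competency, row in report.items():
    print(competency, row)
```

In this sample, Communication would be flagged for discussion (scores range from 2 to 4), while Problem-Solving would not.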

Mitigating Common Rater Biases

Even with a calibrated team, unconscious biases can still creep in and distort evaluations. These mental shortcuts are just part of being human, but you can actively counteract them with awareness and training.

Two of the most common biases that plague interview panels are:

- Central Tendency Bias: This is the habit of scoring most candidates as "average," avoiding the high and low ends of the scale. It often happens when interviewers are uncertain or want to avoid making a tough call, but it masks real differences in performance.

- Leniency/Severity Bias: This is when an interviewer is consistently too easy (leniency) or too harsh (severity) compared to their peers. This skews the data and gives candidates an unfair advantage or disadvantage simply based on who they talk to.

To fight these, encourage your interviewers to use the full range of the scoring scale. During calibration, make a point of discussing what a true "1" looks like versus a "5." Remind them that a rubric isn't about being nice; it’s about being accurate. This is a vital piece of building an equitable hiring process. If you want to dive deeper, check out our detailed guide on diversity hiring best practices.
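Both biases leave a trace in the score history, so a quick check can tell you who to talk to before the next calibration session. This is an illustrative heuristic only: the thresholds (`leniency_gap`, `midpoint_share`) and the sample data are assumptions, and the comparison is only meaningful when raters have interviewed broadly comparable candidates.

```python
from statistics import mean


def rater_profiles(scores_by_rater, leniency_gap=0.75, midpoint_share=0.8, midpoint=3):
    """Flag raters whose score history hints at a common rating bias.

    scores_by_rater maps rater name -> list of 1-5 scores they have given.
    """
    all_scores = [s for scores in scores_by_rater.values() for s in scores]
    panel_mean = mean(all_scores)

    profiles = {}
    for rater, scores in scores_by_rater.items():
        offset = mean(scores) - panel_mean          # above or below the panel average
        share_mid = scores.count(midpoint) / len(scores)  # how often they pick the middle
        flags = []
        if offset >= leniency_gap:
            flags.append("possible leniency")
        elif offset <= -leniency_gap:
            flags.append("possible severity")
        if share_mid >= midpoint_share:
            flags.append("possible central tendency")
        profiles[rater] = {"offset": round(offset, 2), "flags": flags}
    return profiles


# Hypothetical score histories for three interviewers.
history = {
    "Ana": [3, 3, 3, 3, 3, 3],
    "Ben": [5, 4, 5, 5, 4, 5],
    "Chi": [1, 2, 4, 5, 3, 2],
}
profiles = rater_profiles(history)
for rater, profile in profiles.items():
    print(rater, profile)
```

A flag is a prompt for a conversation, not a verdict: an interviewer may simply have seen a stronger or weaker slate of candidates.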

Integrating Rubrics into Your Workflow

For rubrics for interviews to actually work, they have to be woven into your team's day-to-day workflow. If using the rubric feels like a bureaucratic chore, adoption will plummet, and interviewers will go right back to scribbling unstructured notes on a notepad.

The best way to ensure consistent use is to build the rubric directly into your Applicant Tracking System (ATS). Most modern ATS platforms have features for creating custom scorecards. This keeps the evaluation criteria front-and-center during feedback submission and makes it incredibly easy to compare candidates side-by-side.

This structured approach doesn't just improve fairness; it dramatically boosts accuracy. A study in the Journal of Applied Psychology, for instance, found that using rubrics in hiring leads to a 34% improvement in hiring accuracy while simultaneously reducing bias. That kind of lift gives any organization a significant competitive edge.

By embedding the rubric into the tools your team already uses, you make the right way to interview the easy way. This operational discipline is the final, essential step in turning your rubric from a document into a powerful, consistent, and fair hiring compass.

How Do You Scale Rubrics Across Different Roles?

A single, well-built rubric is a fantastic compass for one hire. But when you’re scaling, that one compass just won’t cut it. You need a full-blown navigation system.

Hiring for dozens of unique roles while keeping quality and consistency high is a monumental task. Without a unified framework, you end up with a mess of disconnected rubrics. Each one reflects a different team's biases and priorities, which slowly dilutes your hiring standards across the board.

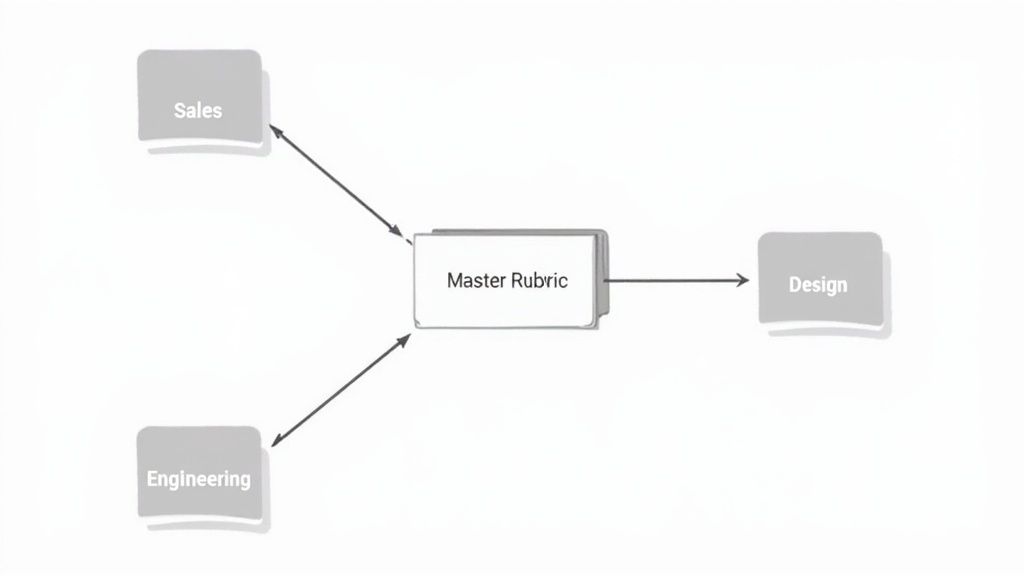

The trick is to build a modular system. You need to balance universal company values with the specific skills each role demands, ensuring every new person strengthens the company as a whole. This is where a "master rubric" comes into play.

As you can see, a central rubric of core values can standardize how you evaluate specific skills across different departments, creating a cohesive system that actually works.

Establish a Core Master Rubric

First things first: you need to define what success looks like at your company, no matter the job title. This is your master rubric—a tight set of 3-5 core competencies that reflect your organization's non-negotiable values and principles.

These aren't technical skills; they are the bedrock behaviors you expect from everyone. Think about competencies like:

- Accountability: Do they own their work and its outcomes, good or bad?

- Curiosity: Are they genuinely driven to learn and challenge the way things are done?

- Collaboration: Do they actively seek out input and make their peers better?

These core traits should be evaluated in every single interview, whether you’re talking to an entry-level analyst or a C-suite executive. It ensures that as you grow, you’re not just hiring for skills—you're reinforcing the cultural foundation of your organization with every offer you make.

This isn't just a hunch; it's a growing trend. Recent survey data shows that nearly two-thirds of employers (64.8%) now use skills-based hiring for entry-level roles, and 73% have built competency-based job descriptions to back it up (source: the NACE report on skills-based hiring trends).

Create Modular, Role-Specific Add-Ons

Once your master rubric is set, you can build out modular, role-specific "add-ons." This is where you get into the nitty-gritty of the technical and functional skills a specific position requires. The beauty of this system is its flexibility.

Take a core competency like Communication, which is probably on your master rubric. What that actually looks like day-to-day varies wildly between roles:

- For a Sales Role: "Articulates the value proposition with compelling clarity and handles objections persuasively."

- For an Engineering Role: "Documents technical decisions clearly and explains complex system logic to non-technical stakeholders."

This modular approach stops you from reinventing the wheel with every new job req. You just plug the relevant role-specific module into the master rubric. It gives you a consistent framework but allows for crucial customization, perfectly balancing company-wide standards with departmental needs. This is a lifesaver for high-volume hiring, where you need to run efficient batch evaluations of candidates without letting quality slip.

By combining a master rubric with role-specific modules, you create a hiring system that is both scalable and precise. It ensures every hire aligns with core company values while possessing the exact skills needed to excel in their unique function.
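The "plug in a module" idea can be sketched as a simple merge: start from the master rubric and let the role module add or specialize competencies. Everything here is illustrative. The `MASTER_RUBRIC` and `ROLE_MODULES` dictionaries and the `build_rubric` helper are hypothetical, the generic Communication description is an assumption, and the role-specific wording is taken from the Sales and Engineering examples above.

```python
# Company-wide competencies evaluated in every interview.
MASTER_RUBRIC = {
    "Accountability": "Owns their work and its outcomes, good or bad.",
    "Curiosity": "Driven to learn and to challenge the way things are done.",
    "Collaboration": "Actively seeks out input and makes peers better.",
    "Communication": "Communicates clearly with the people they work with.",
}

# Role-specific modules can add competencies or sharpen a master one.
ROLE_MODULES = {
    "sales": {
        "Communication": "Articulates the value proposition with compelling "
                         "clarity and handles objections persuasively.",
    },
    "engineering": {
        "Communication": "Documents technical decisions clearly and explains "
                         "complex system logic to non-technical stakeholders.",
    },
}


def build_rubric(role):
    """Combine the master rubric with one role module (module wording wins)."""
    if role not in ROLE_MODULES:
        raise KeyError(f"no role module defined for {role!r}")
    return {**MASTER_RUBRIC, **ROLE_MODULES[role]}


sales_rubric = build_rubric("sales")
engineering_rubric = build_rubric("engineering")
print(sorted(sales_rubric))
print(engineering_rubric["Communication"])
```

Because the master entries are always present in the merged result, every role is still evaluated on the core values, while the module decides what a shared competency such as Communication looks like in practice.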

Front-Load Your Pipeline with Aligned Candidates

Here’s the thing about even the best interview rubrics: they’re used after a candidate is already in your pipeline. The real magic happens when you use your rubric's competencies to shape your sourcing strategy from the very beginning.

Instead of just scanning resumes for keywords, you can proactively hunt for candidates who have already demonstrated the behaviors and skills you care about. This front-loads your pipeline with high-quality talent that’s already a great match before the first interview even happens.

This is where a tool like PeopleGPT becomes a game-changer. You can take the core competencies from your rubric and translate them directly into a natural language search to find people who fit your exact blueprint.

PeopleGPT Workflow: Sourcing for Rubric Competencies

Prompt: "Find me software engineers who have contributed to open-source data visualization projects, have written technical blog posts about performance optimization, and previously worked at product-led growth companies known for a culture of rapid iteration."

Output:

- A curated list of engineers with profiles showing commits to projects like D3.js or Apache Superset.

- Links to their personal blogs or Medium articles discussing topics like code refactoring and database query optimization.

- Career histories showing experience at companies like Figma, Slack, or Miro.

Impact:

- This search directly sources candidates who exhibit the "Technical Proficiency" and "Curiosity" competencies from your rubric.

- It slashes screening time by finding people whose demonstrated behaviors align with your criteria, not just their resume keywords.

- The candidates who enter your interview process have a much higher probability of success, making your entire funnel more efficient.

FAQs: Interview Rubrics (2026)

Even the best-laid plans can hit a few snags. Rolling out a new process like interview rubrics often brings up some common questions from the team. Let's tackle them head-on so you can get your hiring managers and interviewers on the same page.

How do I get hiring managers to actually use these?

Position rubrics as a tool that makes their lives easier. Explain how a clear rubric eliminates long, subjective debriefs and provides data-backed justification for hiring decisions. Pilot it on one tough-to-fill role to prove its value in action.

Are rubrics just for senior or tech roles?

Not at all. While essential for complex roles, their real power is bringing consistency to every hire. For high-volume or entry-level positions, a rubric helps you quickly and fairly identify candidates with the core skills and potential to grow.

What's the difference between a rubric and a scorecard?

Think of the hiring rubric as the blueprint created before the job is posted; it defines what "good" looks like. The interview scorecard is the tool used during the interview to measure a candidate against that pre-defined blueprint.

The implication of adopting rubrics is clear: you move from being a reactive order-taker to a strategic talent advisor. By embedding this objective compass into your process, you build a fairer, more predictable, and higher-performing organization one hire at a time.
